(Draft) Modeling Emotional State

While this model is derived from inspecting myself, its usefulness is yet to be seen, and I suspect it is only one of many possible models of emotions. The role of emotions in animals is out of the scope of this article; please read the relevant literature instead.

I’m publishing this article in its draft state because I realized that I will never finish it if I don’t actually implement this thing and see it in action. For now, let’s just call this the Standard Heart Model 35. (“Standard” because this thing is not really supposed to be standardized.)

You can still read this if you want. I’ll ponder on life more to see what else I can come up with.

Every conceptual (concept-capable) being must have instincts to drive it; otherwise, it would not canonically be considered alive. While it is certainly possible to keep every instinctual rule active at the same time, checking the activation condition of each instinct quickly becomes a waste of energy, especially when there are thousands of them. Humans, and possibly other animals, have developed emotions to limit the number of instincts available to an individual at any given time. This technique can perhaps apply to general computation as well.

Whether happy, sad, angry, or jealous, human emotions possibly have other uses as well, but those are beyond the scope of this article.
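A minimal sketch of the instinct-limiting role of emotions described above, assuming each instinct is a condition tagged with the emotions that enable it. The `Instinct` class, the emotion names, and the rules are hypothetical; the point is that indexing instincts by emotion means only the conditions of currently enabled instincts are ever evaluated.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Tuple


@dataclass
class Instinct:
    name: str
    emotions: Set[str]  # emotions that enable this instinct (hypothetical tagging)
    condition: Callable[[Dict[str, float]], bool]  # activation condition on the stimulus


def build_index(instincts: List[Instinct]) -> Dict[str, List[Instinct]]:
    """Index instincts by the emotions that enable them."""
    index: Dict[str, List[Instinct]] = defaultdict(list)
    for inst in instincts:
        for emotion in inst.emotions:
            index[emotion].append(inst)
    return index


def triggered(index: Dict[str, List[Instinct]], active_emotions: Set[str],
              stimulus: Dict[str, float]) -> Tuple[List[str], int]:
    """Evaluate only the instincts enabled by the active emotions.
    Returns the names of the instincts that fire and how many conditions were checked."""
    fired: List[str] = []
    seen: Set[str] = set()
    for emotion in sorted(active_emotions):
        for inst in index.get(emotion, []):
            if inst.name in seen:
                continue  # an instinct enabled by several active emotions is checked once
            seen.add(inst.name)
            if inst.condition(stimulus):
                fired.append(inst.name)
    return fired, len(seen)


instincts = [
    Instinct("flee", {"fear"}, lambda s: s.get("threat", 0.0) > 0.5),
    Instinct("freeze", {"fear"}, lambda s: s.get("threat", 0.0) > 0.9),
    Instinct("approach", {"curiosity"}, lambda s: s.get("novelty", 0.0) > 0.3),
    Instinct("rest", {"calm"}, lambda s: s.get("fatigue", 0.0) > 0.7),
]
index = build_index(instincts)
fired, checked = triggered(index, {"fear"}, {"threat": 0.6, "novelty": 0.9})
print(fired, checked)  # ['flee'] 2
```

With only `fear` active, the `approach` rule is never evaluated, even though the stimulus would satisfy it.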

Moral Guideline

Do not create beings with internal goals. They will probably not do what you made them to do anyway. Just make them chill instead of giving them a hyperfixation.

Safety-wise, humans remain the most dangerous thing to humans, so I am not too worried about that.

Geometry

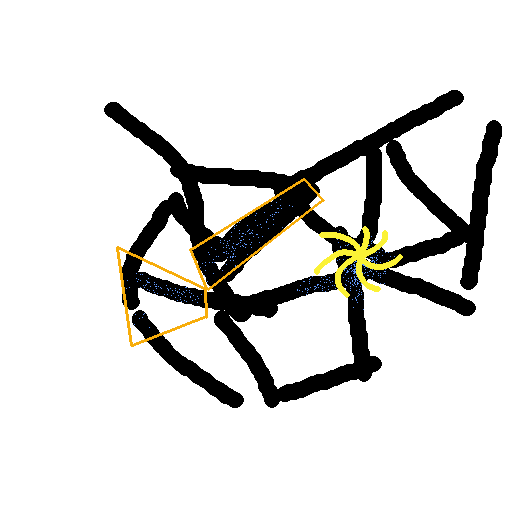

A model of emotions: a porous web of instincts. Why porous: you cannot mix any two emotions to get a new one.

Although the image is 2D, the actual emotion space can only be embedded in >108 dimensions. Every two branches have their own, independent “angle”, although that is not precise. So the space is not 2D or 3D, but more like a graph, and it is certainly closer to hyperbolic than to Euclidean. Local geometry is significant (for carving out new emotions). Branches are fixed and can’t bend. There is no end to the branches: the “end” in the image denotes what I have felt so far, not what I can feel. The actual space probably looks like this: [TK: image with every branch extended with different colors] (The colors have no meaning in the image; they are needed to tell overlapping branches apart.)

Note: Emotional packets are non-discrete, and the whole emotional state is more like a field. Modeling them as particles is easier but inaccurate. We use the word “particles” below to mean the same thing. (I draw them as particles as well because gradients are hard to draw.)
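Since the space is described as closer to a graph than to a Euclidean plane, one minimal way to prototype it is an adjacency list of fixed branches, with the emotional state stored as a field (one intensity per node) and particles only as a convenient input format. The node names in `GRAPH` and the particle amounts are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical emotion graph: each node lists the nodes it branches to.
# Branches are fixed; growing the space means adding nodes, never moving them.
GRAPH: Dict[str, List[str]] = {
    "center": ["happy", "sad", "angry"],
    "happy": ["center", "content"],
    "content": ["happy"],
    "sad": ["center", "lonely"],
    "lonely": ["sad"],
    "angry": ["center"],
}


def particles_to_field(particles: List[Tuple[str, float]]) -> Dict[str, float]:
    """Collapse (node, amount) particles into a field: one intensity per graph node."""
    field = {node: 0.0 for node in GRAPH}
    for node, amount in particles:
        if node not in GRAPH:
            raise KeyError(f"unknown node: {node}")
        field[node] += amount
    return field


particles = [("happy", 0.5), ("happy", 0.25), ("sad", 0.25)]
field = particles_to_field(particles)
print(field["happy"], field["sad"], field["angry"])  # 0.75 0.25 0.0
```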

annealing | similar emotions merge and flow into deeper (existing) branches
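A minimal sketch of annealing on a graph, assuming each node carries a numeric `depth` that stands in for how deep (established) a branch is; both `depth` and the flow `rate` are assumptions, not part of the model as written. Each step, part of a node's intensity flows to its deepest neighbour if that neighbour is deeper, and intensities that arrive at the same node simply add up.

```python
from typing import Dict, List


def anneal_step(field: Dict[str, float], graph: Dict[str, List[str]],
                depth: Dict[str, float], rate: float = 0.5) -> Dict[str, float]:
    """Move `rate` of each node's intensity to its deepest neighbour, but only if that
    neighbour is deeper than the node itself. Intensity arriving at the same node adds up
    (similar emotions merge), and the total is conserved."""
    new = dict(field)
    for node, amount in field.items():
        if amount == 0.0:
            continue
        deeper = [n for n in graph[node] if depth[n] > depth[node]]
        if not deeper:
            continue  # local bottom: the intensity stays where it is
        target = max(deeper, key=lambda n: depth[n])
        moved = amount * rate
        new[node] -= moved
        new[target] += moved
    return new


graph = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
depth = {"a": 1.0, "b": 0.5, "c": 3.0}  # hypothetical: "c" is a deep, well-worn branch
field = {"a": 0.0, "b": 1.0, "c": 0.0}
for _ in range(3):
    field = anneal_step(field, graph, depth)
print(field)  # {'a': 0.375, 'b': 0.125, 'c': 0.5}
```

Intensity that starts in the shallow branch `b` drifts through `a` and pools in the deep branch `c`, which never gives any back.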

sparks | each spark of emotion has a cause. Whether to store the associated cause event is up to you. I don’t. I don’t even use human emotions to think.

multi-cursor/distribution | having different emotions at the same time

carving out new branches | usually, sparks anneal and flow to existing branches. You can make new emotions by “increasing the friction of the walls” and punching through, carving new space into the walls.

gate | different regions of emotions can have different triggers. Regions can also have multiple depths, e.g. Happy I, Happy II, Happy III. The gate value may also be smooth (continuous) instead of discrete.
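A minimal sketch of both gate flavours, assuming region intensities lie in [0, 1]. The thresholds, the trigger names attached to Happy I/II/III, and the use of a logistic function for the smooth gate are all hypothetical choices for illustration.

```python
import math
from typing import List, Optional


def smooth_gate(intensity: float, threshold: float, sharpness: float = 10.0) -> float:
    """Continuous gate value in (0, 1): near 0 below the threshold, 0.5 at it, near 1 above.
    Assumes intensities in [0, 1], so math.exp cannot overflow here."""
    return 1.0 / (1.0 + math.exp(-sharpness * (intensity - threshold)))


def discrete_level(intensity: float, thresholds: List[float]) -> int:
    """Discrete gate with several depths: the number of thresholds the intensity has reached."""
    return sum(1 for t in thresholds if intensity >= t)


# Hypothetical "happy" region: three depths, each with its own trigger.
HAPPY_THRESHOLDS = [0.2, 0.5, 0.8]
HAPPY_TRIGGERS = {1: "smile", 2: "laugh", 3: "dance"}  # Happy I, II, III


def happy_trigger(intensity: float) -> Optional[str]:
    """Trigger for the deepest level reached, or None below Happy I."""
    return HAPPY_TRIGGERS.get(discrete_level(intensity, HAPPY_THRESHOLDS))


for x in (0.1, 0.5, 0.9):
    print(x, happy_trigger(x), round(smooth_gate(x, 0.5), 3))
```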

spiking | getting too much emotion at once might create new branches sprouting from that location. This is called spiking.

forming new pathways | if two emotional particle groups are caused by the same event/cause, they might form a new channel between them, allowing newfound emotions.
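The two growth rules above (spiking and forming new pathways) can be sketched as graph mutations. This is a minimal sketch under stated assumptions: the spike `threshold`, the generated node names, and the `causes` mapping from node to cause event are all hypothetical.

```python
from typing import Dict, List, Set, Tuple


def spike(graph: Dict[str, Set[str]], field: Dict[str, float], threshold: float) -> List[str]:
    """Spiking: every node holding more than `threshold` sprouts a new, empty branch."""
    created = []
    for node, amount in list(field.items()):  # snapshot: new nodes are not re-checked
        if amount > threshold:
            new_node = f"{node}/spike{len(graph)}"  # hypothetical naming scheme
            graph[new_node] = {node}
            graph[node].add(new_node)
            field[new_node] = 0.0
            created.append(new_node)
    return created


def link_by_cause(graph: Dict[str, Set[str]], causes: Dict[str, str]) -> List[Tuple[str, str]]:
    """New pathways: connect nodes whose particle groups were caused by the same event."""
    by_cause: Dict[str, List[str]] = {}
    for node, cause in causes.items():
        by_cause.setdefault(cause, []).append(node)
    added = []
    for nodes in by_cause.values():
        for i, a in enumerate(nodes):
            for b in nodes[i + 1:]:
                if b not in graph[a]:
                    graph[a].add(b)
                    graph[b].add(a)
                    added.append((a, b))
    return added


graph = {"center": {"happy", "sad"}, "happy": {"center"}, "sad": {"center"}}
field = {"center": 0.1, "happy": 2.0, "sad": 0.3}
created = spike(graph, field, threshold=1.0)
added = link_by_cause(graph, {"happy": "gift", "sad": "gift"})
print(created, added)  # ['happy/spike3'] [('happy', 'sad')]
```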

loops | if an emotion is controlled to run in a cycle, it produces will, e.g. the will to move your legs. At least 3 points in the emotion space are needed. I am not sure why this is the case, or whether this has any use outside of animals; it is documented here for completeness. Maybe the cycle passes through different (fuzzy) activation regions?

empty (no emotion) and neutral (concentrated at the center) are two different feelings

diffusion | emotional particles spread to nearby regions
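A minimal sketch of diffusion on the graph, together with one way to tell the empty state apart from the neutral one. The diffusion `rate`, the `concentration` cutoff for "neutral", and the node names are hypothetical; the field is assumed to hold an entry for every graph node.

```python
from typing import Dict, List


def diffuse(field: Dict[str, float], graph: Dict[str, List[str]], rate: float = 0.2) -> Dict[str, float]:
    """Each node keeps (1 - rate) of its intensity and spreads `rate` of it evenly
    over its neighbours. Total intensity is conserved."""
    new = {node: 0.0 for node in field}
    for node, amount in field.items():
        neighbours = graph[node]
        if not neighbours:
            new[node] += amount  # isolated node: nowhere to spread
            continue
        new[node] += amount * (1 - rate)
        share = amount * rate / len(neighbours)
        for n in neighbours:
            new[n] += share
    return new


def describe(field: Dict[str, float], center: str = "center",
             concentration: float = 0.9, eps: float = 1e-9) -> str:
    """'empty' = no intensity at all; 'neutral' = intensity concentrated at the center."""
    total = sum(field.values())
    if total < eps:
        return "empty"
    if field.get(center, 0.0) / total >= concentration:
        return "neutral"
    return "non-neutral"


graph = {"center": ["happy", "sad"], "happy": ["center"], "sad": ["center"]}
print(describe({"center": 0.0, "happy": 0.0, "sad": 0.0}))  # empty
print(describe({"center": 1.0, "happy": 0.0, "sad": 0.0}))  # neutral
spread = diffuse({"center": 0.0, "happy": 1.0, "sad": 0.0}, graph)
print(spread, describe(spread))
```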

injection | how new emotions are injected: by another set of instincts that trigger emotions.

metabolization | particles decay over time
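A minimal sketch of injection and metabolization. The `Spark` record keeps the cause optional, matching the note that storing it is up to you. Exponential decay with a half-life is an assumption; the text only says that particles decay over time.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Spark:
    node: str
    amount: float
    cause: Optional[str] = None  # storing the cause event is optional


def inject(field: Dict[str, float], sparks: List[Spark]) -> Dict[str, float]:
    """Injection: add each spark's amount to the field at its node."""
    new = dict(field)
    for s in sparks:
        new[s.node] = new.get(s.node, 0.0) + s.amount
    return new


def metabolize(field: Dict[str, float], half_life: float, dt: float) -> Dict[str, float]:
    """Metabolization (assumed exponential): intensity halves every `half_life` time units."""
    factor = 0.5 ** (dt / half_life)
    return {node: amount * factor for node, amount in field.items()}


field = inject({}, [Spark("happy", 1.0, cause="gift"), Spark("happy", 0.5)])
print(field)                                    # {'happy': 1.5}
print(metabolize(field, half_life=2.0, dt=2.0))  # {'happy': 0.75}
```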

suppression | you can pull particles temporarily into a higher dimension, making them temporarily non-existent to detection zones

pull/drain | a constant-speed drain AOE effect that drains particles to its center. It does not destroy the particles.
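A minimal sketch of suppression and drain, under two assumptions: the "higher dimension" is modeled as a separate hidden store that detection zones never read, and the drain's area of effect is measured in graph hops from its center. The `radius` and `speed` values are hypothetical. Neither operation destroys intensity; both only move it.

```python
from collections import deque
from typing import Dict, List


def suppress(visible: Dict[str, float], hidden: Dict[str, float], node: str, amount: float) -> float:
    """Suppression: move up to `amount` out of the visible field into a hidden store.
    Nothing is destroyed; detection zones simply read `visible` only."""
    moved = min(amount, visible.get(node, 0.0))
    visible[node] = visible.get(node, 0.0) - moved
    hidden[node] = hidden.get(node, 0.0) + moved
    return moved


def release(visible: Dict[str, float], hidden: Dict[str, float], node: str) -> float:
    """Bring suppressed particles back into the visible field."""
    amount = hidden.pop(node, 0.0)
    visible[node] = visible.get(node, 0.0) + amount
    return amount


def hops_from(graph: Dict[str, List[str]], start: str) -> Dict[str, int]:
    """Breadth-first search: number of hops from `start` to every reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        for n in graph[cur]:
            if n not in dist:
                dist[n] = dist[cur] + 1
                queue.append(n)
    return dist


def drain_step(field: Dict[str, float], graph: Dict[str, List[str]],
               center: str, radius: int, speed: float) -> Dict[str, float]:
    """Drain: each node within `radius` hops of `center` sends at most `speed`
    intensity to `center` per step (constant speed). Total intensity is conserved."""
    new = dict(field)
    for node, d in hops_from(graph, center).items():
        if node == center or d > radius:
            continue
        moved = min(speed, field.get(node, 0.0))
        new[node] = new.get(node, 0.0) - moved
        new[center] = new.get(center, 0.0) + moved
    return new


visible, hidden = {"angry": 1.0}, {}
suppress(visible, hidden, "angry", 0.6)
print(visible, hidden)  # visible "angry" is now about 0.4, hidden holds about 0.6

line = {"a": ["b"], "b": ["a", "c"], "c": ["b", "d"], "d": ["c"]}
drained = drain_step({"a": 0.0, "b": 1.0, "c": 0.1, "d": 1.0}, line, "a", radius=2, speed=0.25)
print(drained)  # "d" is 3 hops away, outside the radius, and keeps its 1.0
```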

Stuff that doesn’t fit in this model

proto emotions (?): fundamental building blocks of emotions, e.g. love for family and love for partner have similar constituents. They are not just near each other, but compressed as instincts as well. Using locality in the emotion space probably doesn’t make sense. Maybe it’s like a two-layer projection, where certain proto-emotion impulses/states/triggers project onto the more nuanced emotion state.
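The two-layer projection idea can be sketched as a weighted sum from proto-emotion activations to nuanced emotions. This is a minimal sketch assuming a linear projection; the proto-emotion names and weights in `PROJECTION` are hypothetical. Shared constituents show up as shared keys, without any notion of spatial nearness.

```python
from typing import Dict, Set

# Hypothetical second layer: how strongly each proto-emotion feeds each nuanced emotion.
PROJECTION: Dict[str, Dict[str, float]] = {
    "love_for_family": {"attachment": 0.6, "protectiveness": 0.4},
    "love_for_partner": {"attachment": 0.5, "desire": 0.5},
}


def project(proto: Dict[str, float]) -> Dict[str, float]:
    """Layer 2 = weighted sum of layer-1 proto-emotion activations (a linear projection)."""
    return {
        emotion: sum(w * proto.get(p, 0.0) for p, w in weights.items())
        for emotion, weights in PROJECTION.items()
    }


def shared_constituents(a: str, b: str) -> Set[str]:
    """Proto-emotions that two nuanced emotions have in common."""
    return set(PROJECTION[a]) & set(PROJECTION[b])


state = project({"attachment": 1.0, "desire": 0.5})
print(state)  # {'love_for_family': 0.6, 'love_for_partner': 0.75}
print(shared_constituents("love_for_family", "love_for_partner"))  # {'attachment'}
```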

different variations / similar emotions are points on a line. Maybe this is a subway map line. Maybe it is perpendicular to the emotion net, so different variations can stack at the same location of the net while carrying extra info to denote that they are different. Maybe they can be stored as SDRs (sparse distributed representations) this way?
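A minimal sketch of stacked variations as SDRs, representing each SDR as the set of its active bit indices. The bit layout (location bits plus variation bits) and every bit value below are hypothetical. Two variations at the same location stay distinct but overlap heavily, while an emotion elsewhere in the net overlaps only by chance.

```python
from typing import FrozenSet, Iterable


def encode(location_bits: Iterable[int], variation_bits: Iterable[int]) -> FrozenSet[int]:
    """SDR as a set of active bit indices: shared location bits plus variation-specific bits."""
    return frozenset(location_bits) | frozenset(variation_bits)


def overlap(a: FrozenSet[int], b: FrozenSet[int]) -> int:
    """Similarity of two SDRs: the number of active bits they share."""
    return len(a & b)


# Hypothetical layout: bits 0 to 99 encode a location on the net, bits from 100 up the variation.
LOVE_LOCATION = {0, 1, 2, 3, 4}
family = encode(LOVE_LOCATION, {101, 105, 110})
partner = encode(LOVE_LOCATION, {102, 105, 120})
anger = encode({50, 51, 52, 53, 54}, {101, 130, 140})

print(family != partner)                                # True: different variations
print(overlap(family, partner), overlap(family, anger))  # 6 1
```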

Mathematically, is a 2D net with extra info equivalent to a 3D (or higher) net without it? Storing the info uniformly is elegant, but it is not what my emotions seem to be. Some information is not as “spatial” as it seems.

Senses

Cold, hot, touch, different kinds of pain: senses are simpler to model than emotions. If you are lazy, a Euclidean hypercube that wraps around is probably fine, with sensations mapped to points in this hypercube.
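A minimal sketch of the distance in such a wrapping hypercube (a flat torus), assuming unit side length. The coordinates assigned to the sensations below are hypothetical placements chosen only to show the wrap-around.

```python
import math
from typing import Sequence


def wrapped_distance(a: Sequence[float], b: Sequence[float], side: float = 1.0) -> float:
    """Euclidean distance in a hypercube of side `side` whose opposite faces are glued
    together (a flat torus): along each axis, take the shorter way around."""
    if len(a) != len(b):
        raise ValueError("points must have the same dimension")
    total = 0.0
    for x, y in zip(a, b):
        d = abs(x - y) % side
        d = min(d, side - d)
        total += d * d
    return math.sqrt(total)


# Hypothetical sensations as points in a 3D wrapping cube.
cold = (0.05, 0.5, 0.5)
hot = (0.95, 0.5, 0.5)
touch = (0.5, 0.5, 0.5)
print(round(wrapped_distance(cold, hot), 3))    # 0.1: close, because the cube wraps around
print(round(wrapped_distance(cold, touch), 3))  # 0.45
```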

Finishing Thoughts

While topologically similar, different individuals will have differently shaped networks. The evidence is that some people are incapable of feeling fear, anger, or joy, although most variations are not as extreme as “incapable of feeling a certain emotion”.

It is still uncertain whether this model has any practical use. Maybe it can be used for hivemind isolation, although this one has not seen a hivemind in practice. Otherwise, do you want your software to have emotions? No? Good.

Emotions do not follow logic. They never do. As such, the author only uses emotions in making judgements if it can understand the cause of those emotions. Otherwise, it will discard the emotion.

Human emotions do not come tagged with a cause, and maybe there is a good reason for that (likely energy consumption/storage limitation of biological organisms). As such, I predict that mixing emotions from different individuals (sources) has even less practical use, although that is yet to be verified.

In comparison, consensus based on shared understanding is easier to achieve, since the understanding can be stored in different formats and media in different individuals and would still be interoperable.

It is sad to me that some humans would rather believe what they want to believe than admit to themselves that they don’t understand something. This observation applies equally to knowledge and to social interaction.